Metaphoraction: Support Gesture-based Interaction Design with Metaphorical Meanings

We present Metaphoraction, a creativity support tool that formulates design ideas for gesture-based interactions. The tool helps designers attach metaphorical meanings to interaction designs by interconnecting four components: gesture, action, object, and meaning.

Previous user experience research emphasizes meaning in interaction design beyond conventional interactive gestures. However, existing exemplars that successfully reify abstract meanings through interactions are usually case-specific, and it is currently unclear how to systematically create or extend meanings for general gesture-based interactions. We present Metaphoraction, a creativity support tool that formulates design ideas for gesture-based interactions that convey metaphorical meanings through four interconnected components: gesture, action, object, and meaning. To represent interaction design ideas with these four components, Metaphoraction links interactive gestures to actions based on the similarity of appearances, movements, and experiences; relates actions to objects through immediate association; and bridges objects and meanings by leveraging metaphor TARGET-SOURCE mappings. We build a dataset containing 588,770 unique design idea candidates by surveying related research and conducting two crowdsourced studies to support meaningful gesture-based interaction design ideation. Five design experts validate that Metaphoraction can effectively support creativity and productivity during the ideation process. The paper concludes by presenting insights into meaningful gesture-based interaction design and discussing potential future uses of the tool.

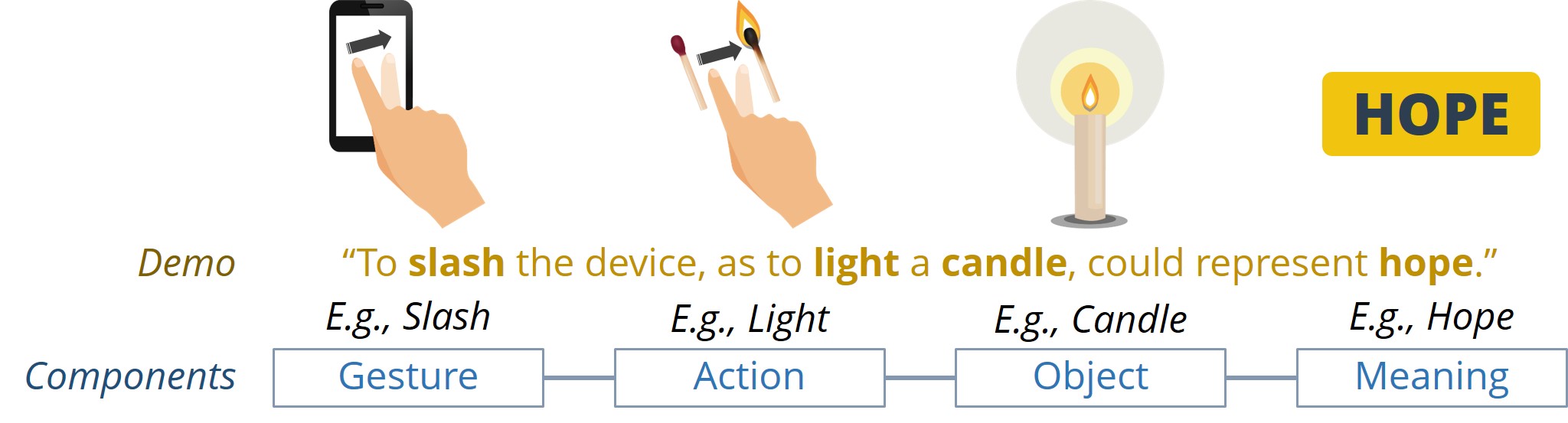

The Components of Metaphoraction

An overview of how Metaphoraction is constructed from four components: gesture, action, object, and meaning.

Specifically, we define:

- Gesture: an operation people perform to activate functions or effects in a digital system;

- Action: a process or movement performed to achieve a particular goal in everyday life;

- Object: a thing to which a specified action is directed; and

- Meaning: a message that is intended, expressed, or signified.
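The four definitions above amount to a simple record type. The Python sketch below is a hypothetical model of one design idea candidate, not the schema of the released Metaphoraction data: the class name `DesignIdea`, its field names, and the example values are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen makes instances hashable, so they can live in sets
class DesignIdea:
    """A design idea candidate as a chain of the four components (hypothetical model)."""

    gesture: str  # operation that activates a function or effect in a digital system
    action: str   # everyday process or movement the gesture resembles
    obj: str      # thing the action is directed at ("object" is a Python builtin)
    meaning: str  # message the object can metaphorically signify

    def describe(self) -> str:
        # Read the chain left to right: gesture -> action -> object -> meaning.
        return " -> ".join((self.gesture, self.action, self.obj, self.meaning))


# Hypothetical example values, not taken from the Metaphoraction dataset.
idea = DesignIdea(gesture="swipe", action="wipe", obj="window", meaning="clarity")
print(idea.describe())  # swipe -> wipe -> window -> clarity
```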

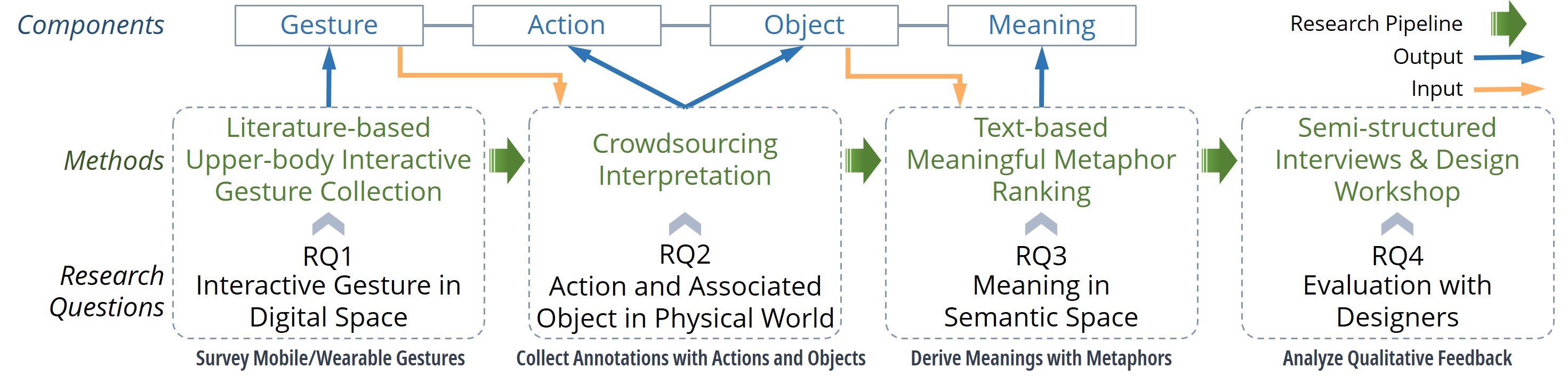

Research Pipeline

The overall research pipeline of Metaphoraction.

RP1: We conduct a literature survey to compile a list of 52 widely accepted, lightweight interactive gestures supported by commercially available devices or off-the-shelf wearable/mobile sensors.

RP2: We carry out a crowdsourced study in which workers translate each given interactive gesture into a set of daily actions performed on associated objects, with the digital system removed from the context.

RP3: We exploit a large metaphor dataset mined from web resources to infer the possible meanings that the objects can convey.

RP4: We validate the interface design of Metaphoraction with university students who have design backgrounds and conduct a design workshop with design experts from a local company.
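Taken together, RP2 and RP3 link gestures to actions, actions to objects, and objects to meanings, so a design idea candidate is one path through all three links. The sketch below assumes, hypothetically, that each link is stored as a separate lookup table (`gesture_to_actions`, `action_to_objects`, `object_to_meanings` are illustrative names holding toy data); the actual dataset layout may differ. Enumerating every path and de-duplicating shows why the candidate count grows multiplicatively with the branching at each link.

```python
def enumerate_candidates(gesture_to_actions, action_to_objects, object_to_meanings):
    """Chain three hypothetical lookup tables into (gesture, action, object, meaning) paths."""
    candidates = set()  # a set keeps only unique candidates
    for gesture, actions in gesture_to_actions.items():
        for action in actions:
            # An action or object with no outgoing links contributes no candidate.
            for obj in action_to_objects.get(action, []):
                for meaning in object_to_meanings.get(obj, []):
                    candidates.add((gesture, action, obj, meaning))
    return sorted(candidates)


# Toy tables standing in for RP2 (gesture -> action -> object) and RP3 (object -> meaning).
gesture_to_actions = {"swipe": ["wipe", "brush"], "pinch": ["pinch"]}
action_to_objects = {"wipe": ["window", "table"], "brush": ["hair"], "pinch": ["salt"]}
object_to_meanings = {
    "window": ["clarity", "opportunity"],
    "table": ["negotiation"],
    "hair": ["care"],
}

candidates = enumerate_candidates(gesture_to_actions, action_to_objects, object_to_meanings)
for c in candidates:
    print(c)
```

Here `pinch` contributes no candidate because the toy table lists no meaning for `salt`, so all four results start from `swipe`.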

Application

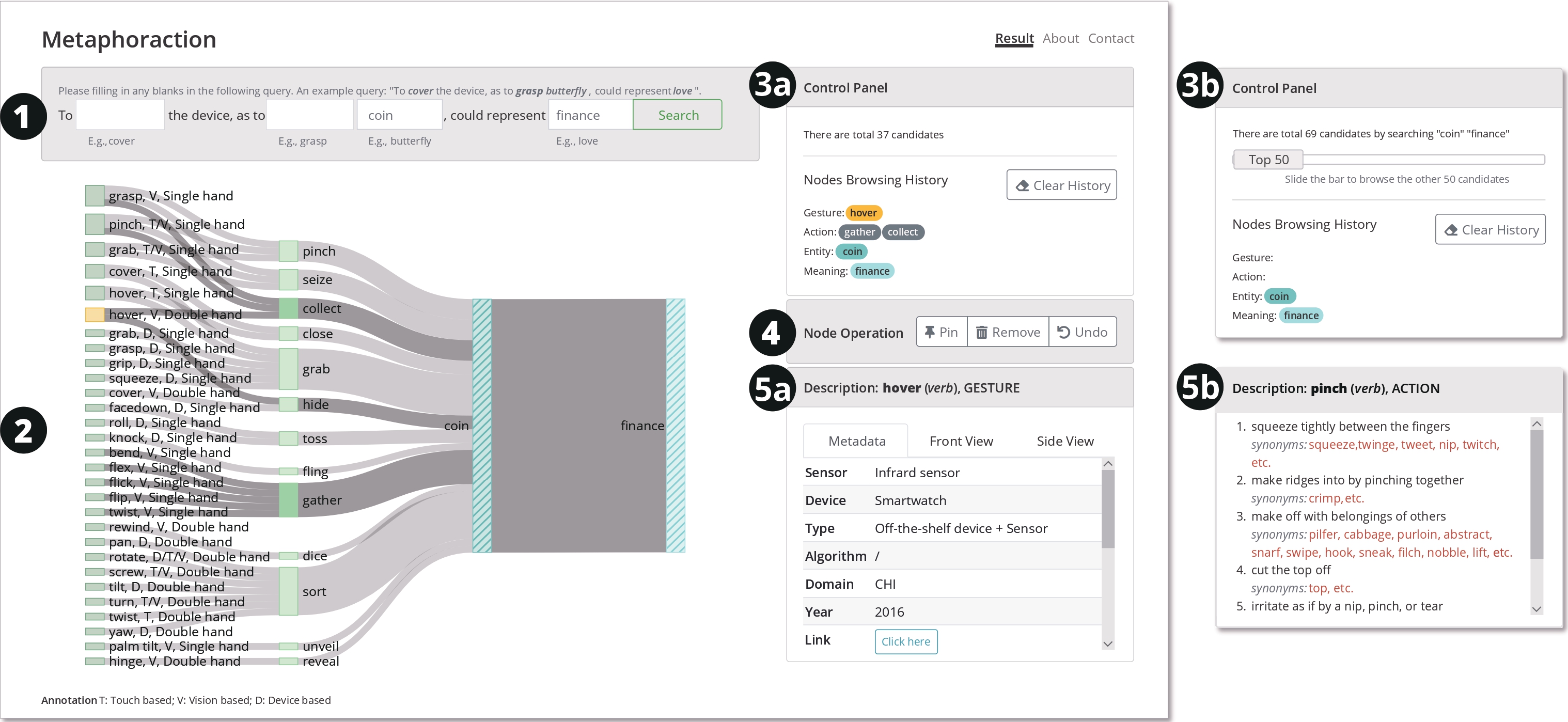

The interface of Metaphoraction. ➊ is the search box; ➋ is the Sankey diagram visualizing the possible design idea candidates; ➌ is the control panel; ➍ is the node operation panel; ➎ is the description view.

This creativity support tool aims to fulfill the following tasks:

T1: Support searching for arbitrary keywords in any component;

T2: Show the relationships between components based on query results;

T3: Enable multi-directional and multifaceted exploration of all the components; and

T4: Track browsing history for user reference.
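Tasks T1, T2, and T4 can be illustrated with a few small functions over candidate tuples. This is a minimal sketch, not Metaphoraction's implementation: `search`, `sankey_links`, and `History` are hypothetical names, and counting candidates per link is only one plausible way to size Sankey links. Because the search matches any component, a query can start from a gesture, an object, or a meaning alike, which is one ingredient of the multi-directional exploration in T3.

```python
from collections import Counter


def search(candidates, keyword):
    """T1: keep candidates whose components contain the keyword (case-insensitive)."""
    kw = keyword.lower()
    return [c for c in candidates if any(kw in part.lower() for part in c)]


def sankey_links(candidates):
    """T2: count links between adjacent columns of a Sankey diagram.

    Keys are (column index, source node, target node); the column index keeps a
    word that appears in two columns (e.g. "pinch" as gesture and as action)
    from being merged into one node.
    """
    links = Counter()
    for c in candidates:
        for i in range(len(c) - 1):
            links[(i, c[i], c[i + 1])] += 1
    return links


class History:
    """T4: keep past queries in the order they were issued."""

    def __init__(self):
        self.queries = []

    def record(self, keyword):
        self.queries.append(keyword)


# Hypothetical candidates in (gesture, action, object, meaning) order.
candidates = [
    ("swipe", "wipe", "window", "clarity"),
    ("swipe", "wipe", "window", "opportunity"),
    ("swipe", "wipe", "table", "negotiation"),
    ("swipe", "brush", "hair", "care"),
]

history = History()
history.record("WIN")
results = search(candidates, "WIN")
print(len(results))  # 2
print(sankey_links(results))
```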

The data and code are available on GitHub.

Reflections

This work presents Metaphoraction, a creativity support tool for gesture-based interaction design with metaphorical meanings. Through a web interface, the tool supports designers in systematically exploring the metaphorical meanings of gesture-based interactions by browsing design idea candidates that consist of four distinct components: gesture, action, object, and meaning. We connect these four components in three steps. First, we conduct a literature survey of 71 papers on commonly adopted upper-body mobile/wearable interactive gestures. Second, we invite crowd workers to translate those interactive gestures into daily actions and associated objects based on similarities in appearance, movement, and experience when performing those actions. Third, we explore the potential extended meanings of those objects through metaphorical mappings, with probabilities predicted from crowdsourced ratings. Experts from our design workshop suggest that Metaphoraction can support the ideation of meaningful gesture-based interactions with improved productivity and creativity. We discuss insights into meaningful interaction design and present future work that could further empower interaction designers.
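The object-to-meaning links carry probabilities predicted from crowdsourced ratings. How those probabilities are predicted is not reproduced here; the sketch below takes them as given (hypothetical values) and shows one way a tool could rank and threshold the meanings of an object. `top_meanings`, `k`, and `min_prob` are illustrative names, not part of the released code.

```python
def top_meanings(meaning_probs, obj, k=3, min_prob=0.0):
    """Rank the meanings of one object by (given) probability.

    Returns up to k (meaning, probability) pairs with probability >= min_prob,
    highest first; ties are broken alphabetically so the order is stable.
    """
    scored = meaning_probs.get(obj, {})
    ranked = sorted(
        ((m, p) for m, p in scored.items() if p >= min_prob),
        key=lambda mp: (-mp[1], mp[0]),
    )
    return ranked[:k]


# Hypothetical probabilities; in Metaphoraction they are predicted from
# crowdsourced ratings, a step this sketch does not model.
meaning_probs = {"window": {"clarity": 0.8, "opportunity": 0.6, "escape": 0.6, "view": 0.2}}

print(top_meanings(meaning_probs, "window", k=2))  # [('clarity', 0.8), ('escape', 0.6)]
print(top_meanings(meaning_probs, "window", min_prob=0.5))
```

An object with no recorded meanings simply returns an empty list, so callers need no special case.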

Zhida Sun, Sitong Wang, Chengzhong Liu, Xiaojuan Ma, Metaphoraction: Support Gesture-based Interaction Design with Metaphorical Meanings, ACM Transactions on Computer-Human Interaction (ToCHI), 2022